Stealth 13 2019 CPU power limits w/ dedicated GPU

Hi,

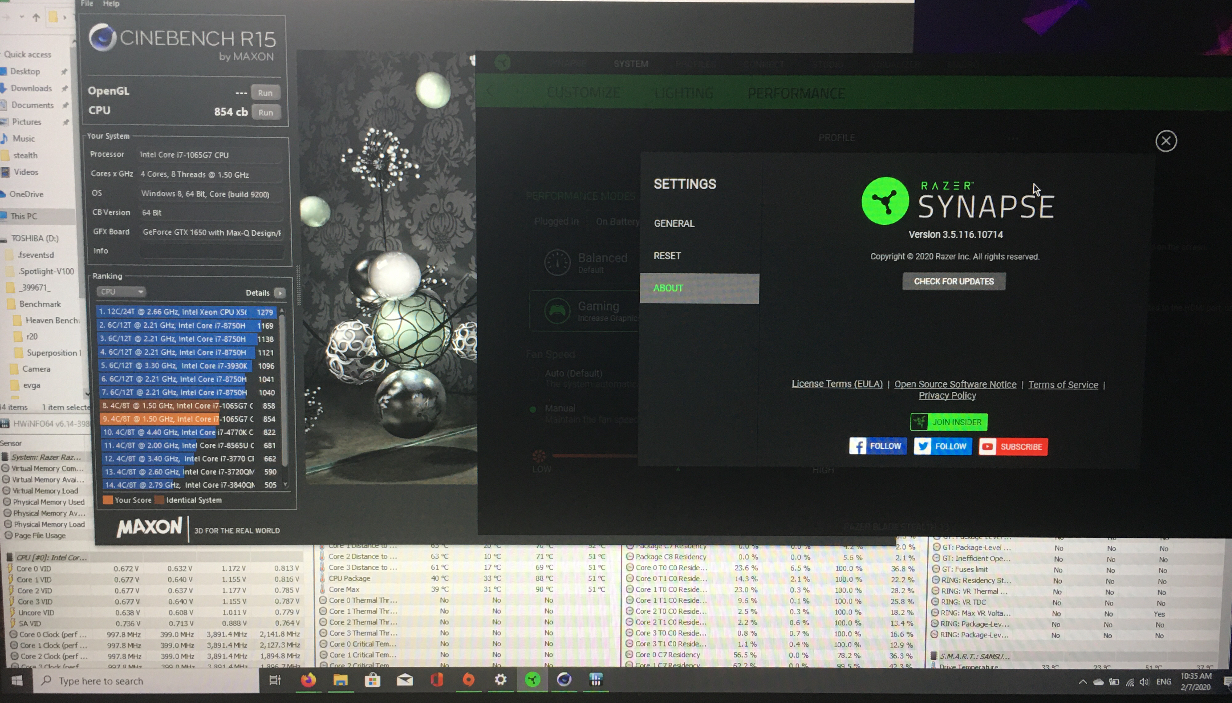

I am thinking of getting a Razer Blade Stealth 13 with the i7-1065G7 and maybe a dedicated NVIDIA GPU. To save some money and battery life, I am only looking at the FHD version. After reading some reviews, the following statement struck me: the version without a dedicated GPU can run the CPU at 25 W, whereas the version with a dedicated GPU limits the CPU to 15 W, no matter whether the GPU is active or not. See for example (under "Processor").

Is this information really correct and still current? It looks like an artificial restriction to me. Maybe a BIOS update fixed it?

